As someone who has worked in security for a long time, I still read a lot of whitepapers and reports. Sometimes it feels more like entertainment than like reading something that influences how I work or improves security day to day.

The inaugural study of EPSS data and performance from the Cyentia Institute and FIRST.org, “A Visual Exploration of Exploitation in the Wild”, was not on the entertainment side of the scale. It proved to be a great read because it provides interesting, actionable information on handling vulnerabilities at scale. The report contains close to 20 takeaways, but here are my top 6:

1. It is possible to evaluate EPSS performance for coverage and efficiency

If you’ve ever wondered (I know I have!) what EPSS values you should filter for in your vulnerability management processes in order to achieve the best possible risk reduction with the lowest amount of effort, you’re in for a treat. The report explains how EPSS performs and how it compares to simply using CVSS scores.
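
As FIRST defines them for EPSS, coverage is the share of exploited vulnerabilities that a remediation policy actually fixes (recall), and efficiency is the share of fixed vulnerabilities that turned out to be exploited (precision). Below is a minimal Python sketch of both metrics for the policy “fix everything with an EPSS score at or above a threshold”. It assumes you already have, for each CVE, an EPSS score and a flag saying whether exploitation was later observed; the `Vuln` record and the sample data are hypothetical and only illustrate the arithmetic.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Vuln:
    cve: str          # hypothetical placeholder IDs in the sample below
    epss: float       # EPSS probability, 0.0 to 1.0
    exploited: bool   # was exploitation observed afterwards?


def coverage_and_efficiency(vulns: Iterable[Vuln], threshold: float) -> Tuple[float, float]:
    """Return (coverage, efficiency) for the policy 'fix if EPSS >= threshold'."""
    vulns = list(vulns)
    selected = [v for v in vulns if v.epss >= threshold]   # what we would fix
    exploited = [v for v in vulns if v.exploited]          # what attackers used
    hits = sum(1 for v in selected if v.exploited)         # fixed AND exploited
    coverage = hits / len(exploited) if exploited else 0.0
    efficiency = hits / len(selected) if selected else 0.0
    return coverage, efficiency


# Made-up sample: one hit, one wasted fix, one miss, one correct skip.
sample = [
    Vuln("CVE-A", 0.92, True),
    Vuln("CVE-B", 0.40, False),
    Vuln("CVE-C", 0.05, True),
    Vuln("CVE-D", 0.02, False),
]

print(coverage_and_efficiency(sample, 0.1))  # -> (0.5, 0.5)
```

Sweeping the threshold and plotting the two numbers against each other gives the coverage/efficiency trade-off the report explores; lowering the threshold raises coverage at the cost of efficiency.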

So many things in the security industry are done because they are “best practice” or simply because “we’ve always done it this way”. If you look at the headlines and think things are great, feel free to keep doing the same thing over and over. I don’t think things are great, and I won’t keep doing things the same old way.

2. Risk-tolerant or resource-challenged organizations might not benefit from a “once exploited, always exploited” approach

I firmly believe that if you are targeted by attackers, it does not matter whether a vulnerability is six months or six years old: attackers in possession of an exploit will attempt to exploit the vulnerability. I have worked in security companies, fintech, and banking, sectors that are somewhat risk-averse but attractive targets for criminals, and I have had the resources to manage security risks. This experience probably contributes to my bias towards a “better safe than sorry” approach.

I find the report’s suggestion to prioritize new vulnerabilities over old ones interesting. In a resource-constrained environment, it certainly seems logical to focus on what is currently being exploited. But the report also notes that old vulnerabilities keep being exploited, so why gamble by leaving a system running software that is known to have been exploited in the past?

3. CVSS is not sufficient, and you could get six times more value for your buck by using EPSS

Given a finite amount of resources to fix vulnerabilities, targeting issues with a relatively high EPSS score yields significantly higher coverage than targeting issues with a high CVSS score.

The difference is even more striking once you look at lower CVSS thresholds, such as 7 and up. Many organizations focus on CVSS 7+, with SLAs tailored specifically to it, because that range maps to “Highs” and “Criticals”.

In reality, if you do that, you’ll spend six times the effort you would with an EPSS threshold that achieves the same coverage. And because EPSS is not tailored to any specific organization, you can improve on that further by adding information about your own context to get the best possible risk reduction for the available resources.
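
To make the “same coverage, less effort” comparison concrete, here is a hedged Python sketch. It measures the coverage of a CVSS ≥ 7 policy, then walks EPSS thresholds from highest to lowest until it finds the first one that matches that coverage, and reports how many vulnerabilities each policy asks you to fix. The `Vuln` record, the `compare_policies` helper, and the sample data are all hypothetical; the toy numbers are not meant to reproduce the report’s six-fold result.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Vuln:
    cve: str          # hypothetical placeholder IDs in the sample below
    cvss: float       # CVSS base score, 0.0 to 10.0
    epss: float       # EPSS probability, 0.0 to 1.0
    exploited: bool   # exploitation observed during the evaluation window


def coverage(selected: List[Vuln], vulns: List[Vuln]) -> float:
    """Share of all exploited vulnerabilities that the selection would fix."""
    exploited = sum(1 for v in vulns if v.exploited)
    hits = sum(1 for v in selected if v.exploited)
    return hits / exploited if exploited else 0.0


def compare_policies(
    vulns: List[Vuln], cvss_min: float = 7.0
) -> Tuple[float, int, Optional[float], Optional[int]]:
    """Return (coverage, fixes under CVSS, matching EPSS threshold, fixes under EPSS)."""
    # Policy 1: fix everything at or above the CVSS cut-off.
    by_cvss = [v for v in vulns if v.cvss >= cvss_min]
    target = coverage(by_cvss, vulns)
    # Policy 2: try EPSS thresholds from highest to lowest. The selection only
    # grows as the threshold drops, so the first match is the least work.
    for t in sorted({v.epss for v in vulns}, reverse=True):
        by_epss = [v for v in vulns if v.epss >= t]
        if coverage(by_epss, vulns) >= target:
            return target, len(by_cvss), t, len(by_epss)
    return target, len(by_cvss), None, None


# Made-up sample for illustration only; substitute your own scan data.
sample = [
    Vuln("CVE-1", 9.8, 0.95, True),
    Vuln("CVE-2", 9.1, 0.01, False),
    Vuln("CVE-3", 8.8, 0.02, False),
    Vuln("CVE-4", 7.5, 0.03, False),
    Vuln("CVE-5", 7.2, 0.60, True),
    Vuln("CVE-6", 5.3, 0.01, False),
]

cov, n_cvss, t, n_epss = compare_policies(sample)
print(f"coverage {cov:.0%}: CVSS>=7 fixes {n_cvss}, EPSS>={t} fixes {n_epss}")
# -> coverage 100%: CVSS>=7 fixes 5, EPSS>=0.6 fixes 2
```

Your own context (asset criticality, internet exposure, compensating controls) could be folded in by adjusting which vulnerabilities each policy selects before comparing.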

4. Exploits are typically used constantly, but once they aren’t, they rarely flare back up

While we commonly use vulnerability age to prioritize, we should also consider when a vulnerability was last exploited. Research from Mandiant supports this takeaway: “exploitation is most likely to occur within the first month after an initial patch for a flaw has been released, with a total of 29 n-day vulnerabilities being exploited within the first month of being disclosed (versus 23 flaws being first exploited after the first six months).” Unfortunately, that data is not (yet?) readily available in most vulnerability management tools, but if you can augment your data with it, you will be able to make more rational decisions.
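
If you can enrich your findings with the date exploitation was last observed (for example from a threat intelligence feed), a simple recency split captures this takeaway. The sketch below is hypothetical throughout: the `last_exploited` field, the 90-day window, and the ordering are assumptions to tune, not values from the report.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Finding:
    cve: str
    epss: float
    # Hypothetical enrichment field: date exploitation was last observed
    # (None if it has never been observed).
    last_exploited: Optional[date]


def prioritize(
    findings: List[Finding], today: date, window_days: int = 90
) -> Tuple[List[Finding], List[Finding]]:
    """Split findings into recently exploited ones and the rest, each sorted by EPSS."""
    cutoff = today - timedelta(days=window_days)

    def recent(f: Finding) -> bool:
        return f.last_exploited is not None and f.last_exploited >= cutoff

    # Highest EPSS first within each queue.
    hot = sorted((f for f in findings if recent(f)), key=lambda f: f.epss, reverse=True)
    rest = sorted((f for f in findings if not recent(f)), key=lambda f: f.epss, reverse=True)
    return hot, rest


# Made-up findings for illustration.
today = date(2023, 6, 1)
findings = [
    Finding("CVE-OLD", 0.30, date(2021, 1, 15)),  # exploited, but not for years
    Finding("CVE-NEW", 0.20, date(2023, 5, 20)),  # exploited last month
    Finding("CVE-NONE", 0.50, None),              # never seen exploited
]

hot, rest = prioritize(findings, today)
print([f.cve for f in hot], [f.cve for f in rest])
# -> ['CVE-NEW'] ['CVE-NONE', 'CVE-OLD']
```

Old findings land in a second queue instead of being dropped, which leaves room for a more risk-averse organization to keep working through them.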

5. Time-to-exploitation is a more useful metric than severity

By using EPSS, we remove much of the guesswork involved in predicting whether a vulnerability will be exploited soon. For example, a vulnerability is often very unlikely to be exploited on the day it is published, which means it is often counterproductive to “drop everything and patch”.

EPSS scores are updated daily and estimate the probability of exploitation within the next 30 days, so make sure you use fresh data every time you decide what the most valuable work is.
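
FIRST publishes current EPSS scores through a public API at `https://api.first.org/data/v1/epss`. Here is a minimal sketch, using only the Python standard library, that fetches scores for a batch of CVEs and flags scores older than a day. The response shape assumed here (a `data` list of objects with `cve`, `epss`, `percentile`, and `date` fields, with numbers encoded as strings) reflects FIRST’s documentation as I understand it; check the current API docs before relying on it. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from datetime import date
from typing import Dict, List, Tuple

# FIRST's public EPSS endpoint; verify against the current API documentation.
EPSS_API = "https://api.first.org/data/v1/epss"


def parse_epss(payload: dict) -> Dict[str, Tuple[float, date]]:
    """Map CVE ID -> (EPSS probability, date the score was computed)."""
    scores = {}
    for row in payload.get("data", []):
        # The API returns numbers and dates as strings.
        scores[row["cve"]] = (float(row["epss"]), date.fromisoformat(row["date"]))
    return scores


def is_stale(score_date: date, today: date, max_age_days: int = 1) -> bool:
    """EPSS is republished daily, so treat scores more than a day old as stale."""
    return (today - score_date).days > max_age_days


def fetch_epss(cves: List[str], timeout: float = 10.0) -> Dict[str, Tuple[float, date]]:
    """Fetch current scores for a batch of CVEs (split very long lists)."""
    query = urllib.parse.urlencode({"cve": ",".join(cves)}, safe=",")
    with urllib.request.urlopen(f"{EPSS_API}?{query}", timeout=timeout) as resp:
        return parse_epss(json.load(resp))


# Illustrative payload in the documented shape; the values are made up.
example = {"data": [{"cve": "CVE-EXAMPLE-1", "epss": "0.97",
                     "percentile": "0.99", "date": "2023-06-01"}]}
score, scored_on = parse_epss(example)["CVE-EXAMPLE-1"]
print(score, is_stale(scored_on, today=date(2023, 6, 3)))  # -> 0.97 True
```

Keeping parsing separate from fetching makes it easy to run the same logic against FIRST’s daily CSV exports or a cached copy instead of the live API.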

6. We talk about prioritization a lot, and we should talk about attack surface reduction more

There are only so many hours in a day, but there is no shortage of vulnerabilities.

It also seems inefficient for everyone to rush to patch the same vulnerabilities at the same time. Even with prioritization, dealing with millions of vulnerabilities requires significant effort.

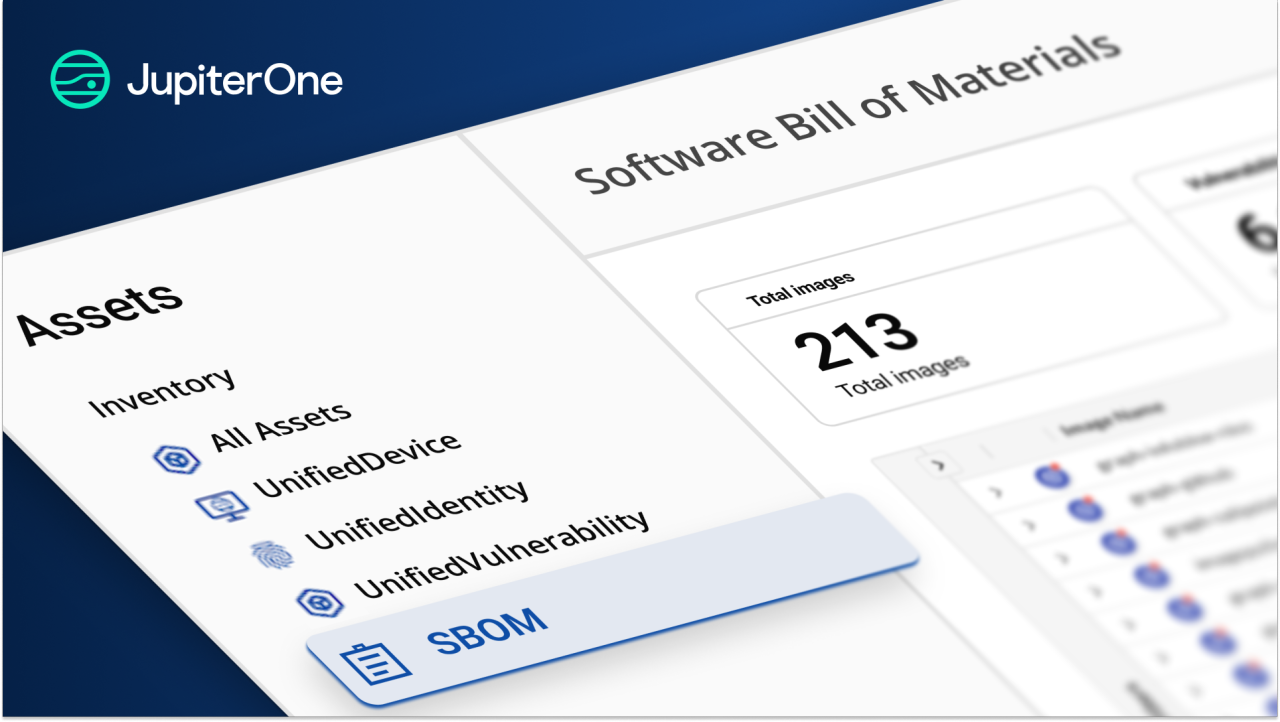

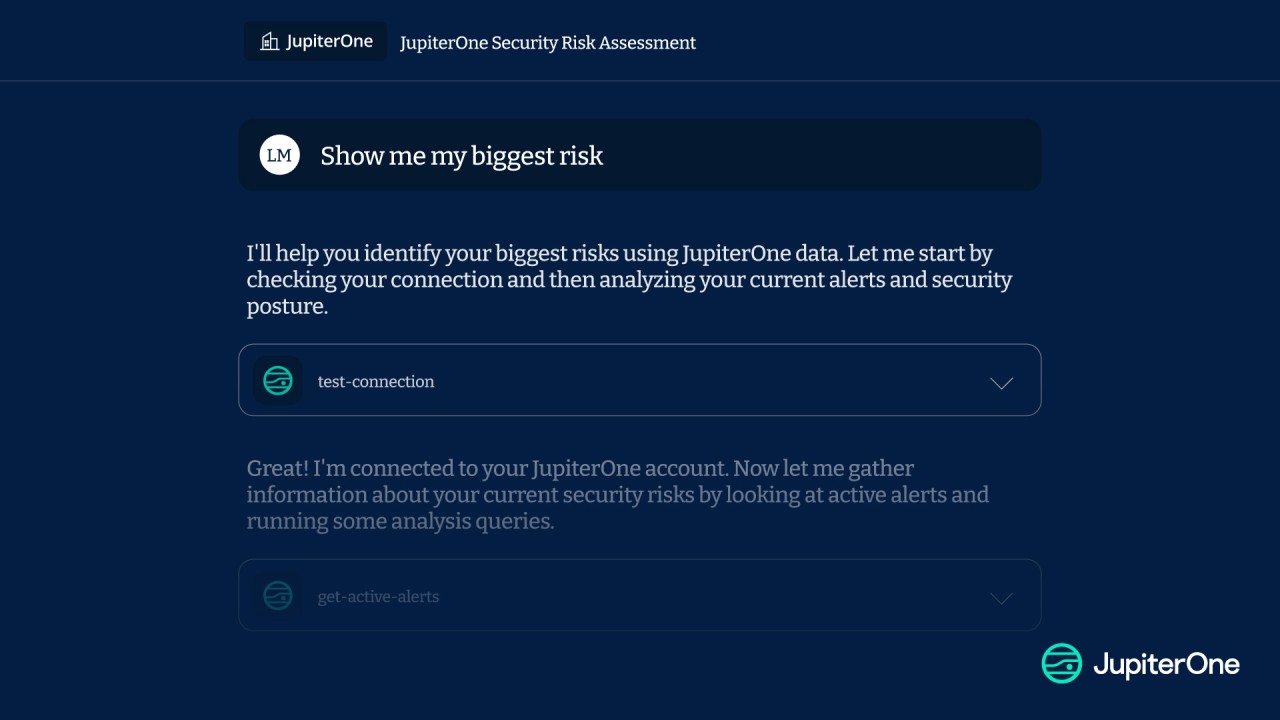

That is why I, and we at JupiterOne, are huge fans of technologies that allow for a smaller attack surface: container images built on minimal distributions that rarely have a CVE, cloud functions, identity-aware proxies that only allow traffic from trusted devices, and more.

As an industry, we will do better if we prioritize using EPSS, and use the time we save to implement changes that reduce attack surface for the future.

Would you patch a Telnet vulnerability with a CVSS score of 10 and an EPSS score of 1.0, or would you remove Telnet and never worry about it again?

Download and read the full “A Visual Exploration of Exploitation in the Wild” EPSS data and performance study here.